The Role of AI Voice Cloning in Digital Product and Experience Design

As digital products become more immersive and personalized, voice has emerged as a powerful design element rather than a secondary feature. What was once limited to basic system prompts or generic narration is now evolving into branded, expressive, and context-aware audio experiences. At the center of this shift is AI voice cloning, a technology that allows digital products to replicate specific human voices with high realism and consistency.

In product and experience design, voice cloning is no longer viewed only as a technical novelty. It is increasingly treated as a strategic design tool that shapes how users perceive, trust, and emotionally connect with digital interfaces.

Why Voice Matters in Modern Digital Experiences

Voice plays a unique role in human communication. Unlike text or visuals, it carries tone, emotion, personality, and nuance simultaneously. In digital environments, voice can humanize otherwise abstract systems, making interactions feel more natural and intuitive.

As products move toward conversational interfaces, such as virtual assistants, guided applications, and interactive media, voice becomes a core part of the user experience. Designers are recognizing that how something sounds can be just as important as how it looks or functions.

From Generic Narration to Brand Identity

Traditionally, digital products relied on stock voices or basic text-to-speech outputs. While functional, these voices were interchangeable and offered little differentiation. AI voice cloning changes this by allowing products to use a consistent, recognizable voice that aligns with brand identity.

In product design, this consistency helps reinforce trust and familiarity. A cloned voice can act as an auditory equivalent of a visual logo, recognizable across platforms, devices, and contexts. Whether guiding users through onboarding, explaining features, or delivering feedback, voice becomes part of the product’s personality.

Personalization and Emotional Engagement

One of the most significant impacts of AI voice cloning is personalization. Digital experiences increasingly adapt to individual users, and voice is a natural extension of that trend. A familiar or preferred voice can make interactions feel more supportive, calming, or authoritative depending on the context.

In wellness apps, learning platforms, and customer support tools, voice cloning allows experiences to feel less mechanical and more human. Designers can choose voices that match emotional intent, helping users feel understood rather than instructed.

Consistency Across Complex Product Ecosystems

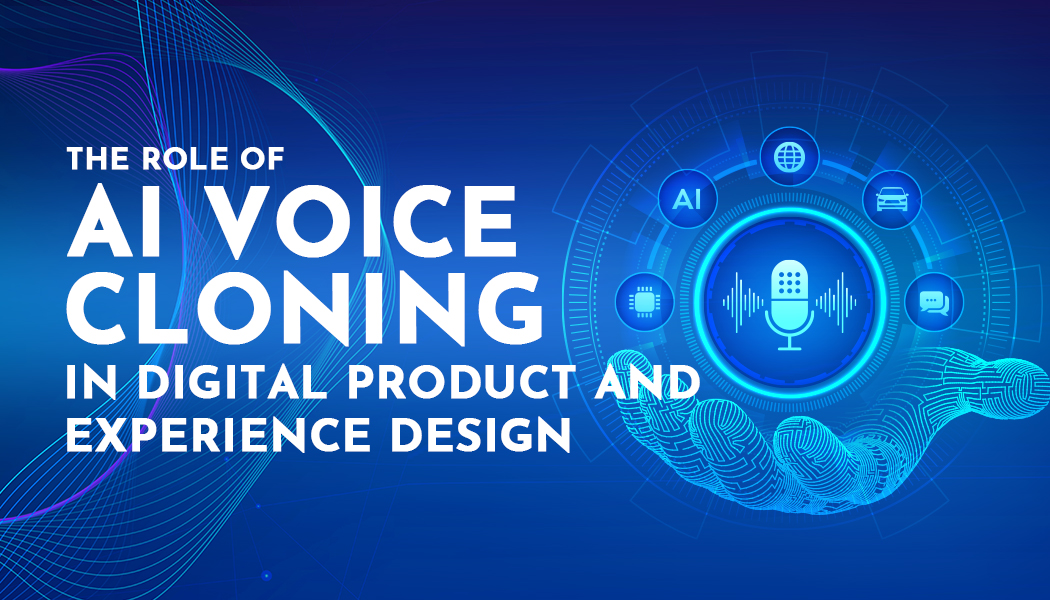

Modern digital products rarely exist in isolation. They span mobile apps, web platforms, smart devices, and embedded systems. Maintaining consistency across these touchpoints is a major challenge for experience designers.

AI voice cloning helps solve this by providing a single voice identity that can be deployed across an entire ecosystem. Users hear the same voice whether they are interacting through a phone, browser, or voice-enabled device, reinforcing continuity and reducing cognitive friction.

Use Cases Shaping Product Design Decisions

AI voice cloning is influencing design decisions across multiple product categories. In software applications, it is used for guided walkthroughs, alerts, and contextual explanations. In media and entertainment, it supports interactive storytelling and dynamic narration. In enterprise tools, it enhances training modules and internal communications.

For designers, this means voice is no longer added late in development. It is considered early in the design process alongside interface flows, tone guidelines, and accessibility planning.

Ethical and Trust Considerations in Experience Design

With greater realism comes greater responsibility. Designers must consider how cloned voices are used and perceived. Transparency is essential; users should understand when they are interacting with an AI-generated voice rather than a live person.

Ethical design practices involve consent, appropriate use, and clear boundaries. When implemented responsibly, voice cloning can enhance trust rather than undermine it. When misused, it risks damaging user confidence and brand credibility.

This makes governance and design intent just as important as technical capability.

Accessibility and Inclusive Design Benefits

Voice-based experiences are closely tied to accessibility. AI voice cloning can support users who have visual impairments or reading difficulties, or who are experiencing cognitive overload, by providing spoken guidance and feedback.

When thoughtfully designed, voice interfaces reduce reliance on dense text and allow users to consume information in ways that suit their needs. Designers increasingly view voice not as a replacement for visual interfaces, but as a complementary layer that expands access.

Integration with Multimodal Experiences

Digital experiences are becoming multimodal, blending text, visuals, sound, and interaction. AI voice cloning fits naturally into this approach. It allows designers to synchronize voice with animations, visual cues, and user actions, creating richer and more intuitive experiences.

For example, a product tutorial may combine on-screen highlights with spoken explanations, improving comprehension and reducing onboarding friction.
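As a rough illustration of that pairing, the sketch below lines up each spoken segment with the on-screen element it should highlight. Everything in it is an assumption made for the example: the `TutorialStep` structure, the element IDs, the narration text, and the clip durations are hypothetical, and no particular voice cloning service or UI framework is implied.

```python
from dataclasses import dataclass


@dataclass
class TutorialStep:
    highlight_id: str   # hypothetical ID of the UI element to highlight
    narration: str      # text the cloned voice would speak
    duration_s: float   # assumed length of the generated audio clip, in seconds


def build_timeline(steps):
    """Return (start_time, highlight_id, narration) tuples.

    Each highlight starts at the same moment its narration starts,
    and the next step begins when the previous clip ends.
    """
    timeline = []
    clock = 0.0
    for step in steps:
        timeline.append((clock, step.highlight_id, step.narration))
        clock += step.duration_s
    return timeline


# Illustrative data only; real durations would come from the audio files.
steps = [
    TutorialStep("search-bar", "Start by typing a project name here.", 2.5),
    TutorialStep("create-button", "Then select Create to open a new project.", 3.0),
]
timeline = build_timeline(steps)

for start, element, text in timeline:
    print(f"{start:>4.1f}s  highlight {element}: {text}")
```

Driving both the highlight and the audio from one timeline is a simple way to keep them from drifting apart when narration is re-recorded or regenerated, since only the durations need to change.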

Industry Perspective on Voice and Human-Centered Design

Human-centered design research consistently emphasizes the importance of communication cues that feel familiar and intuitive. According to Nielsen Norman Group, a leading authority on user experience research, conversational interfaces and voice interactions can improve usability when they align with users’ mental models and expectations.

This insight helps explain why voice cloning, when used thoughtfully, can enhance rather than distract from the user experience.

The Future Role of Voice in Digital Products

As AI-generated voices become more expressive and context-aware, their role in digital product design will continue to expand. Future experiences may adapt voice tone in real time based on user behavior, environment, or emotional signals.

For designers, this means voice will increasingly be treated as a dynamic interface component rather than a static asset. Voice guidelines, testing, and iteration will become standard parts of experience design workflows.